In the previous post, we introduced AI model transparency – also referred to as interpretability – as one of the key characteristics of a trustworthy AI system. Interpretability has come to the forefront with advances in technology, such as decision optimization based on machine learning (ML) and deep learning (DL), that have changed the way we go about modeling, automating and executing our decision-making processes. Broadly speaking, interpretability is the degree of our understanding of the driving factors behind generated outcomes and our ability to make sense of the suggested decisions, both of which are critical foundations underpinning our trust in AI models.

Traditionally, we built systems around pre-set definitions and rules – assuming that the rules are clear, accurate and reliable and unlikely to be changed or improved frequently, if at all. Today, we are shifting gears from a “do as I say” to “let me know” type of approach, increasingly entrusting external AI-powered systems that support our decision-making. However, this transfer of power requires a certain level of assurance that the new AI-driven rules share the main characteristics with the traditional ones above. And that’s where interpretability challenges start to arise.

BREAKING DOWN AN INDUSTRY EXAMPLE

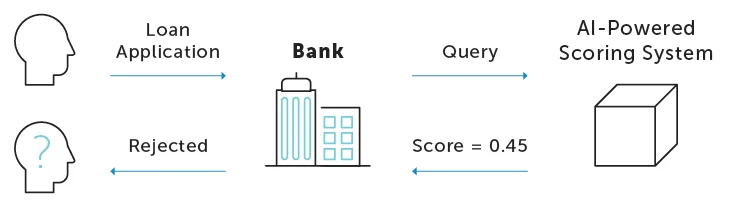

In certain industries like healthcare or financial services, regulating institutions require a high degree of interpretability in predictive models in addition to other dimensions, such as privacy, security and ethics. Let’s consider a loan application process in more detail. When a customer applies for a consumer loan and gets rejected with a score below a certain threshold, it may be either due to objective factors (insufficient income, low credit score, etc.) or because of certain biases innate to the models behind the AI-powered scoring system.

These biases can include:

- Using discriminative properties such as gender, nationality, marital status and residential address (“exclusion bias”)

- Leveraging proxies for discriminative properties such as occupation, travel itineraries, GPS data, frequently visited stores and shopped items, and frequently used mobile applications and in-app purchases (“proxy bias”)

- Not acknowledging the lack of fair representation in data for different groups such as low-income groups or minorities (“exclusion bias”)

- Mislabeling samples such as using policeman or steward instead of police officer and flight attendant for occupation, or mistagging samples by extracting discriminative attributes in resumes such as “woman’s chess club” (“labeling bias”)

In the process of ML model training, various factors influence the final decision. Staying with the loan example, let’s imagine a situation when the borrower’s nationality is one of the factors used in the training dataset that represents people of some nationalities (“exclusion bias”). As a result of the training, the ML model could take nationality into account during decision-making, thus discriminating against the people of the underrepresented or unspecified nationalities. Such a model would not only be unethical by design, but also poorly adaptable to the diversity of the real world.

While a careful examination of the quantitative and qualitative properties of the data before the modeling process (as well as a post-hoc analysis based on a user/QA feedback) could generally help address the challenges described above, having models with a high degree of interpretability would allow you to do this faster, more efficiently and more reliably.

A SIMPLE, NON-TECHNICAL ILLUSTRATION OF INTERPRETABILITY

A “black box” model could be used to prevent the previous loan scenario from happening. Let’s imagine that we have created a system that allows the user to identify animals from an image. The user has never seen cats, but the task is to separate cats from other animals.

With the « black box » ML model, the user receives the answer « this is a cat with a probability of X% » for a picture. Since our user has never seen cats, the user can only believe that the model is confident and accurate in this prediction.

With an interpretable ML model however, the user receives the same answer plus an explanation of why the model made this decision. The regions responsible for contributing to the prediction can be highlighted in the picture, a text description of the object in the picture can be created, and similar objects from the dataset are shown. In this case, the prediction of the model can be useful to the user. The user will be able not only to get the prediction, but also the reasons why such a prediction was made, as well as knowledge and insights about main factors that affect the prediction.

Since ML models are typically used to solve a business problem, every user of the model may not be interested in the details of the inner structure and complexity. Instead, some users use the model only as a tool for solving a specific task. Therefore, it’s extremely important for the model to be interpretable so any user (regardless of technical skill) can easily identify when the model can be trusted, why some decision was made and what happens if the input data gets changed.

INCREASING EXPLAINABILITY WITHOUT COMPROMISING PERFORMANCE

So, what can be done to increase the level of explainability while maintaining the high learning performance of models? There are three broad strategies devised for solving this trade-off challenge that when combined and applied to one or more model classes, help to achieve that objective.

- Deep explanation refers to altering DL model designs in ways that would make them more explainable – by adding new elements to different layers of the neural networks. For example, the original network employed to classify images may add image captions to the training set, to be further used by a second network generating explanations that wouldn’t require a human operator to understand the features and workings of the first network.

- Interpretable models seek to supplement DL with other models that are inherently explainable, such as probabilistic relational models based on Bayesian methods, graphical models or advanced decision trees. If combined intelligently, it could help create more structured or causal models.

- Model induction is a model-agnostic approach requiring you to treat the model as a “black box” and uses experimentation to try inferring something that explains its behavior. In essence, you don’t need to understand what exactly is happening under the hood of the model: It’s enough to play around with inputs around the scenario of interest and observe the model’s response (output), to develop a mental map of what to pay attention to when examining the recommended decisions.

Explainable AI should be a standard component of modern AI products and it can be integrated as a component of AutoML and ML monitoring. Using a variety of techniques to tackle these strategies, we can convert “black box” AI into an equally powerful “glass box” AI, not only promoting trust in the decisions supported by AI models, but also enabling more effective governance and risk management systems. This will be the focus of our next post.